I recently decided I was going to take the rules of the board game Forbidden Island and write them up as code.

I guess that sounds like a weird thing to just decide to do, doesn’t it? It’s actually one part of a bigger goal I have at the moment of teaching myself some practical machine learning. As part of this journey, I heard a great idea from YouTuber Jabrils to set yourself a significant challenge that you’re interested in, and to work towards surmounting that challenge. For Jabrils, his challenge was getting an AI to control a Forrest Gump character to run around a course in a game. For my challenge, I’ve decided I’d like to build an AI that can play Forbidden Island. (And win!)

I guess that sounds like a weird thing to just decide to do, doesn’t it? It’s actually one part of a bigger goal I have at the moment of teaching myself some practical machine learning. As part of this journey, I heard a great idea from YouTuber Jabrils to set yourself a significant challenge that you’re interested in, and to work towards surmounting that challenge. For Jabrils, his challenge was getting an AI to control a Forrest Gump character to run around a course in a game. For my challenge, I’ve decided I’d like to build an AI that can play Forbidden Island. (And win!)

Obviously, if you’re going to have an AI play a game, you first need a digital version of the game for the AI to play. I knew writing up the rules of the game would be a bit of a distraction, but I decided to give it a crack anyway. I’d been making some good progress with the fast.ai machine learning course, but it was hard work and I thought this would be a fun detour.

It ended up taking quite a bit longer than I anticipated. While I was originally only planning to encode the game rules into code, I was eventually seduced by the idea of writing a program that could play the game. Turning my own automatic thoughts while playing the game into code that could automatically execute was quite the challenge. Here’s the top nine things I learned on this journey:

Learning #9: Kotlin is an awesome programming language

I wrote the code for this project in Kotlin. I’ve been using Kotlin at work for about six months now, practicing with it in my spare time, and I’ve really gotten to like it. Now, when you’re doing something that Kotlin has pretty much baked into the language, like creating a data class or filtering a list, it has obvious benefits. I find that a lot of other code written in a business setting, though, isn’t complicated enough to really drive home the benefits of having a strongly functional-leaning language.

With this project, however, I saw Kotlin really come to the fore. There were a number of times where I was able to encode relatively complex logic in just two or three lines, and I sat back and thought, “Man, if I had to write that in Java, it’d be gnarly and noisy and all kinds of gross.” I made advantageous use of sealed classes, smart casts and chaining of advanced collection functions (without learning a whole new collection library), while at the same time leveraging some of the best tools available for Java, like JUnit 5 and AssertJ. In fact, I did wonder sometimes whether, if I had have started coding it in Java instead of Kotlin, whether I would have just given up half way in out of frustration.

Learning #8: The rules of simple games are actually really complex

Forbidden Island isn’t what I’d call a complex game. It’s probably on the same level as Monopoly. Sure, it takes 20 minutes to read and understand the rules the first time you play, but after that you can teach it to a 6 year old and they can play it pretty proficiently.

And yet, turning these 7 pages of rules into code turned out to be a major undertaking. I replicated them with 854 lines of fairly terse functional code, and 2,783 lines of tests. That’s a fairly solid bulk of computer instructions, especially considering the instructions manage to describe the same functionality in less than 200 lines.

Learning #7: The written rules of a game may be ambiguous

At least twice while coding the rules, I found myself needing to decide between one implementation and another, but with the rules not specifying which was correct. I ended up googling the ambiguity and finding that other people had run into the same problem (likely while playing, rather than coding), and that the designer of the game had resolved the ambiguity online.

At least twice while coding the rules, I found myself needing to decide between one implementation and another, but with the rules not specifying which was correct. I ended up googling the ambiguity and finding that other people had run into the same problem (likely while playing, rather than coding), and that the designer of the game had resolved the ambiguity online.

This is an example of a phenomenon I’ve observed many times at work, where code forces us to be utterly precise about all possibilities, but those who are dictating the rules (or the requirements, as the case may be) haven’t necessarily considered every possibility – it’s only when we try to turn what they have prescribed into explicit machine instructions covering all cases that we uncover unknown branches.

Learning #6: Humans don’t consider all the possibilities

Part of the job of implementing this game as code was to implement a function that returns all of the possible actions for a given state. By the time I’d finished, I was astounded first at the complexity of how much code this function required, and secondly by its output: it’s not an unusual scenario for the function to return over 60 possible actions that could be taken. Certainly when I’ve played the game, I’ve never thought to myself, “Hmmm. I have 60 possible moves here. Let me examine them one by one.” The code showed me that when humans play the game, we must be doing something which the computer isn’t able to: we’re taking shortcuts.

'When humans play a game, we must be doing something which a computer isn’t able to do: we’re taking shortcuts' Share on XLearning #5: Our brains are fantastic at matching patterns and ruling out large swathes of options

The reason I don’t think about all of the possible options at any one time is because I’m subconsciously aware that many of the options are crap! When playing a game like this, the human mind often has a handful of current objectives in mind, and I reckon our subconscious is doing pattern matching of the options against those objectives. Unlike the computer, I’m searching for good things to do, not all things I can do. There’s a plethora of bad options that are ruled out by my brain before I ever think about them, and so only the potentially advantageous ones bubble up to my conscious for me to make a detailed comparison.

Learning #4: Getting statistical significance can be really challenging

When you create a program that’s supposed to play a game, the only way to know if it’s any good is to have it play lots of games. As you iterate on the solution, it then becomes important to be able to check whether your changes are improving the solution or making it worse. At first, this was easy. I started with a completely random game player and added in simple rules that had obvious impacts on the results. I had the code play 1,000 random games in each of a variety of different configurations. I picked 1,000 because I’d written a small program that tested the variance of the random game player at different batch sizes and found that, at around 1,000, the variance between batches had essentially converged.

As I got further along, though, I found that sometimes my changes made little improvement in the results and at times, beyond all sense, a new, important rule made the results worse. For a while I chose to remain perplexed about these anomalies and just backed out the seemingly-bad ideas. After it happened a number of times, though, I got curious. It occurred to me to run the variance check code against my rule-based automaton. Can you guess what I found? When the rule-based game player was playing random batches of 1,000 games, the variance in the results between batches was massive! In fact, the variance of the rule-based player only converged once I increased the batch size to 32,000. When I had added a rule and seen the results get worse, all I was actually seeing was statistical noise! Worse even than the rules I’d left out, was that many of the rules where I’d seen slight improvements had probably also just been statistical noise. This didn’t mean they were duds, but I had no trustworthy data for showing they weren’t. I thought 1,000 random games was a lot, but it turns out getting a statistically significant sample across something as complex as a board game-playing automaton requires a huge mount of data points.

Learning #3: Simple rules don’t win games

I worked for several weeks on my rule-based game player. I spent most train rides to work on it. I spent many hours at night on it. I was knowingly but complicitly over-excited by it. My wife got sick of hearing about it. And what do you think all this effort got me? Well… it can win… some games. Sadly, it’s nowhere near the amazing solution I might have imagined it would be if you’d asked me before I started what sort of results I could get by spending that much time coding a solution. When my automaton plays the easiest of easy configurations of the game, its rules-based approach can win an impressive (*cough*) 20% of games! On the hardest of hard configurations, it wins just 0.2%. Compare that to my own results: I think I’ve played the game six or seven times on the easiest configuration, and my win rate is 100%.

I worked for several weeks on my rule-based game player. I spent most train rides to work on it. I spent many hours at night on it. I was knowingly but complicitly over-excited by it. My wife got sick of hearing about it. And what do you think all this effort got me? Well… it can win… some games. Sadly, it’s nowhere near the amazing solution I might have imagined it would be if you’d asked me before I started what sort of results I could get by spending that much time coding a solution. When my automaton plays the easiest of easy configurations of the game, its rules-based approach can win an impressive (*cough*) 20% of games! On the hardest of hard configurations, it wins just 0.2%. Compare that to my own results: I think I’ve played the game six or seven times on the easiest configuration, and my win rate is 100%.

I don’t think this in any way shows that it’s impossible for a computer to be good at winning this game (I would say “good” is best defined as significantly more than half the time). What it does show, though, is that games like this are not easily won by simple rules of the form, “if the game currently matches this state, take this action”. We might feel like that’s what we’re doing when deciding how to play a game sometimes, but there’s actually a lot more to it. We’re aware of what might happen in the next few turns after this one. We can recognise things that have already happened in the game which might affect the future state. We’re able to form medium-term objectives and stick at working towards them, while also being able to abandon them if we see disaster approaching. Following objectives, recalling history and making forward projections are all things that could be added to an automated game player, albeit with an ever-increasing complexity. Isn’t it fascinating, though, that as humans we do these things almost without thinking?

Learning #2: Games are fascinating

You sit down to play a board game. You spend maybe 5-20 minutes reading the rules. It seems pretty simple. You play through it, find a few challenges and how to overcome them, learn what the key mechanics are and how to manipulate them for your own benefit. Most of the games we play are simple and fun. Except they’re not. I mean, they are fun, but they’re not simple. They appear simple to a human mind, but many games are complex enough that computing solutions to them is a significant challenge.

So great is this challenge that games have given their name to a whole branch of mathematics called game theory, which actually extends well beyond social games to analyse a vast array of situations involving rational, strategic interaction: it’s the maths of decision-making. In fact, many games and related problems are so complex that we have trouble understanding exactly how complex they are. What does that mean? One of the most significant unsolved problems in computer science is determining whether problems where solutions are easily verified can also be easily solved (where “easily” means the verification or solution can be completed in a time that doesn’t grow exponentially as the problem size grows). Here’s a great video that explains it in more detail. In short, though, we don’t yet know enough about the maths of games to be able to definitively say that some of them are either easily solved or not easily solved. If that sounds like an interesting problem itself, maybe you should have a crack at it: there’s $1 million up for grabs if you solve it.

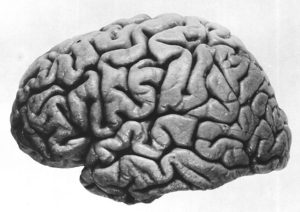

Learning #1: There is a computer made of meat in your head and it’s ridiculously powerful

As I’ve ploughed through this exercise and reflected on it in a way that produced all of the above realisations, one thing kept becoming clearer and clearer: the human brain is amazing. We’ve probably all heard that or said it many times during our lives, but I think we still generally under-appreciate the complexity of what our brain does and the ease with which it does it. Sure, a computer can multiply two ten-digit numbers then find the square root faster than we can take a breath, but humans can do things like cross the road at Shibuya without bumping into anyone using only subtle cues like body position and eye contact. Give that as a goal to an AI robotics team and watch them laugh. And how do we accomplish such complex feats? With electrically-charged meat!

As I’ve ploughed through this exercise and reflected on it in a way that produced all of the above realisations, one thing kept becoming clearer and clearer: the human brain is amazing. We’ve probably all heard that or said it many times during our lives, but I think we still generally under-appreciate the complexity of what our brain does and the ease with which it does it. Sure, a computer can multiply two ten-digit numbers then find the square root faster than we can take a breath, but humans can do things like cross the road at Shibuya without bumping into anyone using only subtle cues like body position and eye contact. Give that as a goal to an AI robotics team and watch them laugh. And how do we accomplish such complex feats? With electrically-charged meat!

I’ll probably try some other approaches to creating a Forbidden Island solver in the future. At this point, I don’t know how successful I’ll be, or even how to calculate what would be a good result compared to a poor one. However, no matter how close I get to the perfect computer player, there will always be one difference between my brain and my laptop. While a program may eventually achieve 100% success at Forbidden Island, only a human brain will reach the end of a game – won or lost – and think to itself, “That was fun”.

Want to try your hand at solving Forbidden Island with code?

All the code I’ve written so far as part of this project is available on GitHub. I’ve structured the code to make it easy for others to write their own game-playing automata, and there’s instructions and tips for how to do it in the README. If you give it a go, make sure you get in touch and let me know how you went!

Image credits:

‘Cool JavaScript Code at Game Jam‘ by Iwan Gabovitch

‘Ambiguous sign‘ by Ian Capper

‘Coin Toss‘ by ICMA Photos

‘Right hemisphere of J. Piłsudski’s brain, lateral view‘ by Mózg Józefa Piłsudskiego